To help you, we've compiled a list of 'must-do' activities below that have proven essential to successful data migration planning. It's not a definitive list and you will almost certainly need to add more points, but it's a great starting point.

Please critique and suggest any additions using the form at the end of the guide (your name/link will be referenced in the guide).

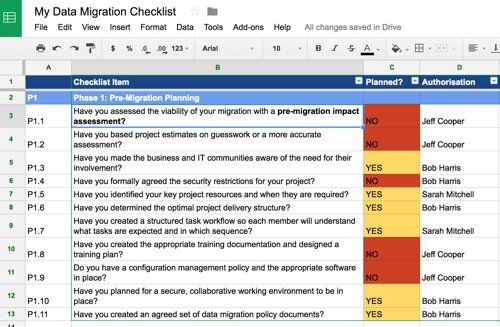

Use the data migration checklist below to ensure that you are fully prepared for the data migration challenges ahead.

Most data migration projects go barreling headlong into the main project without considering whether the migration is viable, how long it will take, what technology it will require and what dangers lie ahead.

It is advisable to perform a pre-migration impact assessment to verify the cost and likely outcome of the migration. The later you plan on doing this the greater the risk so score accordingly.

Don’t worry, you’re not alone: most projects are based on previous project estimates at best or optimistic guesswork at worst.

Once again, your pre-migration impact assessment should provide a far more accurate analysis of cost and resource requirements. If you have tight deadlines, a complex migration and limited resources, make sure you perform a migration impact assessment as soon as possible.

It makes perfect sense to inform the relevant data stakeholders and technical teams of their forthcoming commitments before the migration kicks off.

It can be very difficult to drag a subject matter expert out of their day job for a 2-3 hour analysis session once a week if their seniors are not on board. In addition, by identifying what resources are required in advance you will eliminate the risk of having gaps in your legacy or target skillset.

In addition, there are numerous aspects of the migration that require business sign-off and commitment. Get in front of sponsors and stakeholders well in advance and ensure they understand AND agree to what their involvement will be.

I have wonderful memories of one migration where we thought everything was in place, so we kicked off the project and were promptly shut down on the very first day.

We had assumed that the security measures we had agreed with the client project manager were sufficient. However, we did not reckon on the corporate security team getting in on the action and demanding a far more stringent set of controls that caused 8 weeks of project delay.

Don’t make the same mistake: obtain a formal agreement from the relevant security governance teams in advance. Simply putting your head in the sand and hoping you won’t get caught out is unprofessional and highly risky given the recent loss of data in many organisations.

Don’t start your project hoping that Jobserve.com will magically provision those missing resources you need.

I met a company several months ago who decided they did not require a lead data migration analyst because the “project plan was so well defined”. Suffice to say they’re now heading for trouble as the project spins out of control so make sure you understand precisely what roles are required on a data migration.

Also ensure you have a plan for bringing those roles into the project at the right time.

For example, there is a tendency to launch a project with a full contingent of developers armed with tools and raring to go. This is both costly and unnecessary. A small bunch of data migration, data quality and business analysts can perform the bulk of the migration discovery and mapping well before the developers get involved, often creating a far more successful migration.

So the lesson is to understand the key migration activities and dependencies then plan to have the right resources available when required.

Data migrations do not suit a waterfall approach yet the vast majority of data migration plans I have witnessed nearly always resemble a classic waterfall design.

Agile, iterative project planning with highly focused delivery drops is far more effective, so ensure that your overall plan is flexible enough to cope with the likely change events that will occur.

In addition, does your project plan have sufficient contingency? 84% of migrations fail or experience delay. Are you confident that yours won’t suffer the same consequences?

Ensure you have sufficient capacity in your plan to cope with the highly likely occurrence of delay.

Project initiation will be coming at you like a freight train soon so ensure that all your resources know what is expected of them.

If you don’t have an accurate set of tasks and responsibilities already defined it means that you don’t know what your team is expected to deliver and in what order. Clearly not an ideal situation.

Map out the sequence of tasks, deliverables and dependencies you expect to be required and then assign roles to each activity. Check your resource list, do you have the right resources to complete those tasks?

This is an area that most projects struggle with, so clearly understanding what your resources need to accomplish will help you be fully prepared for the project initiation phase.

This is an extension of the previous point but is extremely important.

Most project plans will have some vague drop dates or timelines indicating when the business or technical teams require a specific release or activity to be completed.

What this will not show you is the precise workflow that will get you to those points. Ideally this should be defined before project inception so that there is no confusion as you move into the initiation phase.

It will also help you identify gaps in your resourcing model where the necessary skills or budgets are lacking.

Data migration projects typically require a lot of additional tools and project support platforms to function smoothly.

Ensure that all your training materials and education tools are tested and in place prior to project inception.

Ideally you would want all the resources to be fully trained in advance of the project but if this isn’t possible at least ensure that training and education is factored into the plan.

Data migration projects create a lot of resource materials. Profiling results, data quality issues, mapping specifications, interface specifications – the list is endless.

Ensure that you have a well defined and tested configuration management approach in place before project inception. You don’t want to be stumbling through project initiation trying to make things work, so test the approach in advance and create the necessary training materials.

If your project is likely to involve 3rd parties and cross-organisational support it pays to use a dedicated product for managing all the communications, materials, planning and coordination on the project.

It will also make your project run smoother if this is configured and ready prior to project initiation.

How will project staff be expected to handle data securely? Who will be responsible for signing off data quality rules? What escalation procedures will be in place?

A typical migration needs a multitude of different policies to run smoothly. It pays to agree these in advance of the migration so that the project initiation phase runs effortlessly.

During this phase you need to formalise how each stakeholder will be informed. We may well have created an overall policy beforehand but now we need to instantiate it with each individual stakeholder.

Don’t create an anxiety gap in your project. Determine what level of reporting you will deliver for each type of stakeholder and get agreement with them on the format and frequency. Dropping them an email six months into the project to say that you’re headed for an 8 week delay will not win you any favours.

To communicate with stakeholders obviously assumes you know who they are and how to contact them! Record all the stakeholder types and individuals who will require contact throughout the project.

Now is the time to get your policies completed and circulated across the team and new recruits.

Any policies that define how the business will be involved during the project also need to be circulated and signed off.

Don’t assume that everyone knows what is expected of them so get people used to learning about and signing off project policies early in the lifecycle.

If you have followed best-practice and implemented a pre-migration impact assessment you should have a reasonable level of detail for your project plan. If not then simply complete as much as possible with an agreed caveat that the data will drive the project. I would still recommend carrying out a migration impact assessment during the initiation phase irrespective of the analysis activities which will take place in the next phase.

You cannot create accurate timelines for your project plan until you have analysed the data.

For example, simply creating an arbitrary 8 week window for “data cleansing activities” is meaningless if the data is found to be truly abysmal. It is also vital that you understand the dependencies in a data migration project: you can’t code the mappings until you have discovered the relationships, and you can’t do that until the analysis and discovery phase has completed.

Also, don’t simply rely on a carbon copy of a previous data migration project plan. Your plan will be dictated by the conditions found on the ground and the wider programme commitments that apply to your particular project.

This should ideally have been created before project initiation, but if it hasn’t, now is the time to get it in place.

There are some great examples of these tools listed over at our sister community site here:

During this phase you must create your typical project documentation such as risk register, issue register, acceptance criteria, project controls, job descriptions, project progress report, change management report, RACI etc.

They do not need to be complete but they do need to be formalised with a process that everyone is aware of.

Project initiation is a great starting point to determine what additional expertise is required.

Don’t leave anything to assumption when engaging with external resources. There should be clear instructions on exactly what needs to be delivered, and these should not be left too late.

At this phase you should be meticulously planning your next phase activities so ensure that the business and IT communities are aware of the workshops they will be involved in.

Don’t assume that because your project has been signed off you will automatically be granted access to the data.

Get approvals from security representatives (before this phase if possible) and consult with IT on how you will be able to analyse the legacy and source systems without impacting the business. Full extracts of data on a secure, independent analysis platform are the best option but you may have to compromise.

It is advisable to create a security policy for the project so that everyone is aware of their responsibilities and the professional approach you will be taking on the project.

What machines will the team run on? What software will they need? What licenses will you require at each phase? It sounds obvious, but not to one recent project manager who completely forgot to put the order in and had to watch 7 members of his team sitting idly by as the purchase order crawled through procurement. Don’t make the same mistake: look at each phase of the project and determine what will be required.

Model re-engineering tools? Data quality profiling tools? Data cleansing tools? Project management software? Presentation software? Reporting software? Issue tracking software? ETL tools?

You will also need to determine what operating systems, hardware and licensing are required to build your analysis, test, QA and production servers. It can often take weeks to procure this kind of equipment so you ideally need to have done this even before project initiation.

A data dictionary can mean many things to many people but it is advisable to create a simple catalogue of all the information you have retrieved on the data under assessment. Make this tool easy to search, accessible but with role-based security in place where required. A project wiki is a useful tool in this respect.

At this stage you will not have a complete source-to-target specification but you should have identified the high-level objects and relationships that will be linked during the migration. These will be further analysed in the later design phase.

It is important that you do not fall foul of the load-rate bottleneck problem so to prevent this situation ensure that you fully assess the scope and volume of data to be migrated.

Focus on pruning data that is historical or surplus to requirements (see here for advice). Create a final scoping report detailing what will be in scope for the migration and get the business to sign this off.
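To make the volume assessment concrete, a rough load-window estimate can be sketched as below. This is only back-of-envelope arithmetic: the record counts, the sustained throughput figure and the function name `estimate_load_hours` are illustrative assumptions, and a real estimate should use throughput measured against your own target interfaces.

```python
def estimate_load_hours(record_count: int, records_per_second: float) -> float:
    """Return the hours needed to load record_count rows at a sustained rate."""
    if records_per_second <= 0:
        raise ValueError("records_per_second must be positive")
    return record_count / records_per_second / 3600


# Hypothetical volumes per object, before and after pruning historical data.
full_scope = {"customers": 2_000_000, "orders": 40_000_000}
pruned_scope = {"customers": 1_500_000, "orders": 10_000_000}

# Assumed sustained load rate of the target interface (records per second).
ASSUMED_RATE = 500.0

for label, scope in (("full", full_scope), ("pruned", pruned_scope)):
    hours = estimate_load_hours(sum(scope.values()), ASSUMED_RATE)
    print(f"{label} scope: {hours:.1f} hours")
```

With these assumed figures the full scope needs roughly 23.3 hours and the pruned scope roughly 6.4 hours, which is the kind of difference that decides whether a load fits inside a weekend cutover window.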

There will be many risks discovered during this phase so make it easy for risks to be recorded. Create a simple online form where anyone can add risks during their analysis. You can filter them later, but for now the aim is to gather as many as possible and see where any major issues are coming from.

If you’ve been following our online coaching calls you will know that without a robust data quality rules management process your project will almost certainly fail or experience delays.

Understand the concept of data quality rules discovery, management and resolution so you deliver a migration that is fit for purpose.

The data quality process is not a one-off effort; it will continue throughout the project. At this phase, however, we are concerned with discovering the impact of the data so decisions can be made that could affect project timescales, deliverables, budget, resourcing etc.
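One way to picture data quality rules discovery and management is as a small catalogue of named checks that can be run against the legacy data to count violations. The sketch below is a minimal illustration, not a substitute for a data quality product: the `QualityRule` class, the rule identifiers and the sample records are all hypothetical, and real rules would be agreed and signed off by the business.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class QualityRule:
    rule_id: str
    description: str
    check: Callable[[dict], bool]  # returns True when the record passes


# Hypothetical rules for illustration only.
RULES = [
    QualityRule("DQ001", "Customer must have a surname",
                lambda r: bool((r.get("surname") or "").strip())),
    QualityRule("DQ002", "Postcode must be populated",
                lambda r: bool((r.get("postcode") or "").strip())),
]


def violations(records: list[dict], rules: list[QualityRule]) -> dict[str, int]:
    """Count how many records fail each rule."""
    counts = {rule.rule_id: 0 for rule in rules}
    for record in records:
        for rule in rules:
            if not rule.check(record):
                counts[rule.rule_id] += 1
    return counts


sample = [
    {"surname": "Khan", "postcode": "AB1 2CD"},
    {"surname": "", "postcode": "EF3 4GH"},
    {"surname": "Lopez", "postcode": None},
]
print(violations(sample, RULES))  # {'DQ001': 1, 'DQ002': 1}
```

Keeping each rule's identifier and description next to its check makes it easier to report violation counts back to the business in terms they can prioritise.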

Now is the time to begin warming up the business to the fact that their beloved systems will be decommissioned post-migration. Ensure that they are briefed on the aims of the project and start the process of discovering what is required to terminate the legacy systems. Better to approach this now than to leave it until later in the project when politics may prevent progress.

These models are incredibly important for communicating and defining the structure of the legacy and target environments.

The reason we have so many modelling layers is so that we understand all aspects of the migration from the deeply technical through to how the business community run operations today and how they wish to run operations in the future. We will be discussing the project with various business and IT groups so the different models help us to convey meaning for the appropriate community.

Creating conceptual and logical models also helps us to identify gaps in thinking or design between the source and target environments far earlier in the project so we can make corrections to the solution design.

Most projects start with some vague notion of how long each phase will take. Use your landscape analysis phase to determine the likely timescales based on data quality, complexity, resources available, technology constraints and a host of other factors that will help you determine how to estimate the project timelines.

By the end of this phase you should have a thorough specification of how the source and target objects will be mapped, down to attribute level. This needs to be at a sufficient level to be passed to a developer for implementation in a data migration tool.

Note that we do not progress immediately into build following landscape analysis. It is far more cost-effective to map out the migration using specifications as opposed to coding which can prove expensive and more complex to re-design if issues are discovered.
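An attribute-level specification can be kept as structured data rather than free text, which makes it easier to review and later hand to a developer. The sketch below is one possible shape, assuming a simple one-to-one mapping; the `AttributeMapping` class, the `apply_spec` helper and every table and column name are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class AttributeMapping:
    source: str   # "TABLE.COLUMN" in the legacy system
    target: str   # "table.column" in the target system
    rule: str     # human-readable transformation rule for reviewers
    transform: Optional[Callable[[str], str]] = None


# Hypothetical source and target names for illustration only.
SPEC = [
    AttributeMapping("CUST.CUST_NM", "customer.full_name", "Trim whitespace", str.strip),
    AttributeMapping("CUST.CNTRY", "customer.country_code", "Upper-case the code", str.upper),
    AttributeMapping("CUST.EMAIL", "customer.email", "Copy unchanged"),
]


def apply_spec(row: dict[str, str], spec: list[AttributeMapping]) -> dict[str, str]:
    """Build a target row from a source row using the specification."""
    out = {}
    for mapping in spec:
        value = row[mapping.source]
        out[mapping.target] = mapping.transform(value) if mapping.transform else value
    return out


legacy_row = {
    "CUST.CUST_NM": "  Ada Lovelace ",
    "CUST.CNTRY": "gb",
    "CUST.EMAIL": "ada@example.com",
}
print(apply_spec(legacy_row, SPEC))
```

Because the rule text sits beside each mapping, the same structure can be walked through with business analysts before anything is coded in the migration tool.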

At the end of this stage you should have a firm design for any interface designs that are required to extract the data from your legacy systems or to load the data into the target systems. For example, some migrations require change data capture functionality so this needs to be designed and prototyped during this phase.
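As one illustration of what such a prototype might look like, the sketch below uses a timestamp watermark, which is only one of several change data capture techniques (log-based capture and database triggers are others). It assumes every legacy row carries a reliable `modified_at` value; that field, the `changed_since` function and the sample rows are hypothetical.

```python
from datetime import datetime


def changed_since(rows: list[dict], watermark: datetime) -> list[dict]:
    """Return rows modified after the watermark (the last successful extract time)."""
    return [row for row in rows if row["modified_at"] > watermark]


# Hypothetical legacy rows for illustration only.
legacy_rows = [
    {"id": 1, "modified_at": datetime(2024, 1, 1, 9, 0)},
    {"id": 2, "modified_at": datetime(2024, 1, 2, 14, 30)},
    {"id": 3, "modified_at": datetime(2024, 1, 3, 8, 15)},
]

last_extract = datetime(2024, 1, 2, 0, 0)
delta = changed_since(legacy_rows, last_extract)
print([row["id"] for row in delta])  # [2, 3]

# Advance the watermark only after the delta has been loaded successfully.
if delta:
    last_extract = max(row["modified_at"] for row in delta)
```

Note that a timestamp watermark cannot see rows deleted from the legacy system, so a prototype along these lines should state how deletions will be detected.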

This will define how you plan to manage the various data quality issues discovered during the landscape analysis phase. These may fall into certain categories such as:

The following article by John Platten of Vivamex gives a better understanding on how to manage cleansing requirements: Cleanse Prioritisation for Data Migration Projects – Easy as ABC?

At this stage you should have a much firmer idea of what technology will be required in the production environment.

The volumetrics and interface throughput performance should be known so you should be able to specify the appropriate equipment, RAID configurations, operating system etc.

At this phase it is advisable to agree with the business sponsors what your migration will deliver, by when and to what quality.

Quality, cost and time are variables that need to be agreed upon prior to the build phase so ensure that your sponsors are aware of the design limitations of the migration and exactly what that will mean to the business services they plan to launch on the target platform.

The team managing the migration execution may not be the team responsible for coding the migration logic.

It is therefore essential that the transformations and rules that were used to map the legacy and target environments are accurately published. This will allow the execution team to analyse the root-cause of any subsequent issues discovered.

It is advisable to test the migration with data from the production environment, not a smaller sample set. By limiting your test data sample you will almost certainly run into conditions within the live data that cause a defect in your migration at runtime.

Many projects base the success of migration on how many “fall-outs” they witness during the process. This is typically where an item of data cannot be migrated due to some constraint or rule violation in the target or transformation data stores. They then go on to resolve these fall-outs and when no more loading issues are found carry out some basic volumetric testing.

“We had 10,000 customers in our legacy system and we now have 10,000 customers in our target, job done”.

We recently took a call from a community member based in Oman. Their hospital had subcontracted a data migration to a company who had since completed the project. Several months after the migration project they discovered that many thousands of patients now had incomplete records, missing attributes and generally sub-standard data quality.

It is advisable to devise a solution that will independently assess the success of the execution phase. Do not rely on the reports and stats coming back from your migration tool as a basis for how successful the migration was.

I advise clients to vet the migration independently, using a completely different supplier where budgets permit. Once the migration project has officially terminated and those specialist resources have left for new projects, it can be incredibly difficult to resolve serious issues. Start to build a method of validating the migration during this phase. Don’t leave it until project execution, when it will be too late.
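The difference between a volumetric check and an independent attribute-level check can be sketched as follows. This is a minimal illustration, assuming both systems can be extracted into dictionaries keyed by a shared business identifier; the `reconcile` function, the patient identifiers and the attribute names are all hypothetical.

```python
def reconcile(source: dict[str, dict], target: dict[str, dict],
              attributes: list[str]) -> dict:
    """Compare source and target records keyed by a shared business identifier."""
    missing = sorted(set(source) - set(target))      # in source, not in target
    unexpected = sorted(set(target) - set(source))   # in target, not in source
    mismatched = {}
    for key in sorted(set(source) & set(target)):
        diffs = [a for a in attributes if source[key].get(a) != target[key].get(a)]
        if diffs:
            mismatched[key] = diffs
    return {"missing": missing, "unexpected": unexpected, "mismatched": mismatched}


# Hypothetical extracts for illustration only.
legacy = {
    "P1": {"name": "A. Said", "dob": "1980-01-01", "blood_group": "O+"},
    "P2": {"name": "B. Nasser", "dob": "1975-06-30", "blood_group": "A-"},
}
migrated = {
    "P1": {"name": "A. Said", "dob": "1980-01-01", "blood_group": "O+"},
    "P2": {"name": "B. Nasser", "dob": "1975-06-30", "blood_group": None},
}

print(len(legacy) == len(migrated))  # True: the volumetric check passes
print(reconcile(legacy, migrated, ["name", "dob", "blood_group"]))
```

Here the record counts match, yet the attribute comparison shows that record `P2` lost its `blood_group` value. Where attributes are transformed in flight, the comparison would need to be made against the expected transformed value rather than the raw source value.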

Following on from the previous point, you need to create a robust reporting strategy so that the various roles involved in the project execution can see progress in a format that suits them.

For example, a migration manager may wish to see daily statistics, a migration operator will need to see runtime statistics and a business sponsor may wish to see weekly performance etc.

If you have created service level agreements for migration success these need to be incorporated into the reporting strategy so that you can track and verify progress against each SLA.

Data quality is continuous and it should certainly not cease when the migration has been delivered as there can be a range of insidious data defects lurking in the migrated data previously undetected.

In addition, the new users of the system may well introduce errors through inexperience so plan for this now by building an ongoing data quality monitoring environment for the target platform.

A useful tool here is any data quality product that can allow you to create specific data quality rules, possesses matching functionality and also has a dashboard element.

What if the migration fails? How will you rollback? What needs to be done to facilitate this?

Hope for the best but plan for the worst case scenario, which is a failed migration. This can often be incredibly complex and require cross-organisation support so plan well in advance of execution.

By now you should have a clear approach, with full agreement, of how you will decommission the legacy environment following the migration execution.

The team running the execution phase may differ from the team on the build phase. Migration execution can be complex, so ensure that the relevant training materials are planned for and delivered by the end of this phase.

It is rare that all data defects can be resolved but at this stage you should certainly know what they are and what impact they will cause.

The data is not your responsibility, however. It belongs to the business, so ensure they sign off any anticipated issues and are fully aware of the limitations the data presents.

Some migrations can take a few hours, some can run into years.

You will need to create a very detailed plan for how the migration execution will take place. This will include sections such as what data will be moved, who will sign off each phase, what tests will be carried out, what data quality levels are anticipated, when the business will be able to use the data and what transition measures need to be taken.

This can become quite a considerable activity so as ever, plan well in advance.

This is particularly appropriate on larger scale migrations.

If you have indicated to the business that you will be executing the migration over an 8 week period and that specific deliverables will be created, you can then map that out in an Excel chart with time points and anticipated volumetrics.

As your migration executes you can then chart actual vs estimated so you can identify any gaps.
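The same actual versus estimated comparison can be computed in a few lines before it is charted. The sketch below assumes an 8 week plan expressed as records migrated per week; all of the figures and the `cumulative_gap` function are hypothetical.

```python
from itertools import accumulate

# Hypothetical plan for an 8 week execution window (records migrated per week).
planned_weekly = [100_000, 150_000, 200_000, 200_000, 200_000, 150_000, 100_000, 50_000]
# Hypothetical actuals for the weeks completed so far.
actual_weekly = [90_000, 120_000, 180_000]


def cumulative_gap(planned: list[int], actual: list[int]) -> list[tuple[int, int, int, int]]:
    """Return (week, planned_total, actual_total, gap) for each completed week."""
    planned_totals = accumulate(planned)
    actual_totals = accumulate(actual)
    return [
        (week, p, a, p - a)
        for week, (p, a) in enumerate(zip(planned_totals, actual_totals), start=1)
    ]


for week, planned_total, actual_total, gap in cumulative_gap(planned_weekly, actual_weekly):
    print(f"week {week}: planned {planned_total:,} actual {actual_total:,} gap {gap:,}")
```

With these assumed figures the gap grows from 10,000 records in week 1 to 60,000 by week 3, which is an early signal that the remaining weeks need either more capacity or a revised plan.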